Paper Reading: "W!NCE: Unobtrusive Sensing of Upper Facial Action Units with EOG-based Eyewear"

One Line Summary

W!NCE develops a two-stage processing pipeline that senses upper facial action units continuously and unobtrusively with high fidelity. Because it does not use a camera, it also avoids the privacy concerns of camera-based methods.

Terms

- Electrooculography (EOG): A technique for measuring the corneo-retinal standing potential that exists between the front and the back of the human eye. The resulting signal is called the electrooculogram. Its primary applications are ophthalmological diagnosis and recording eye movements.

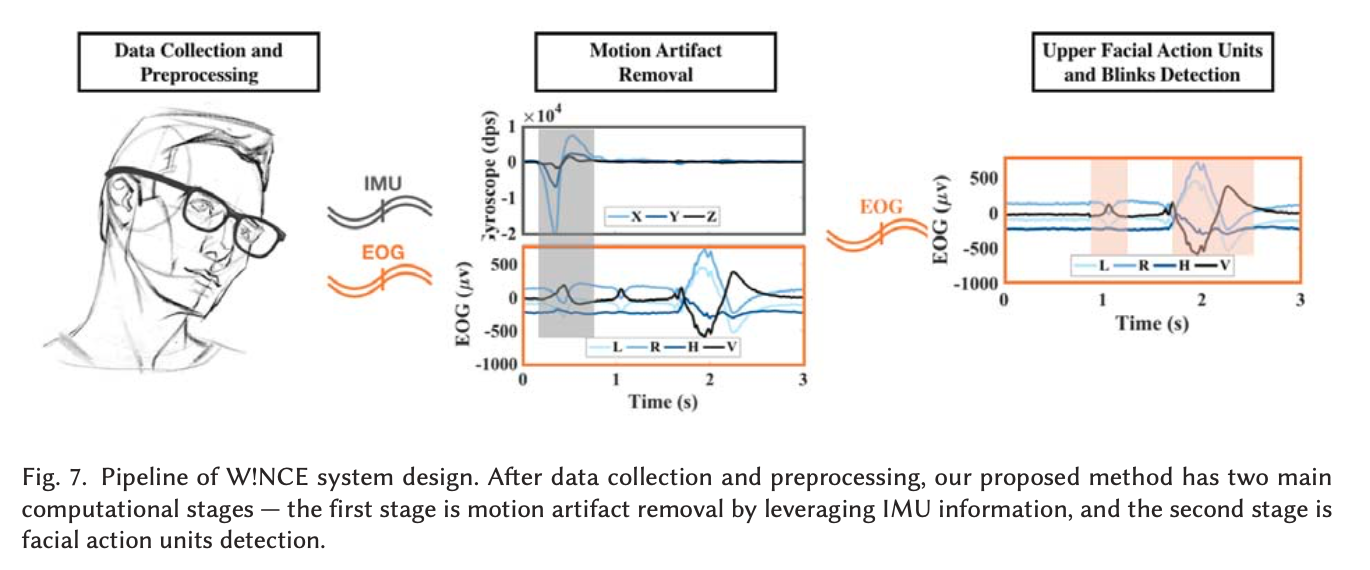

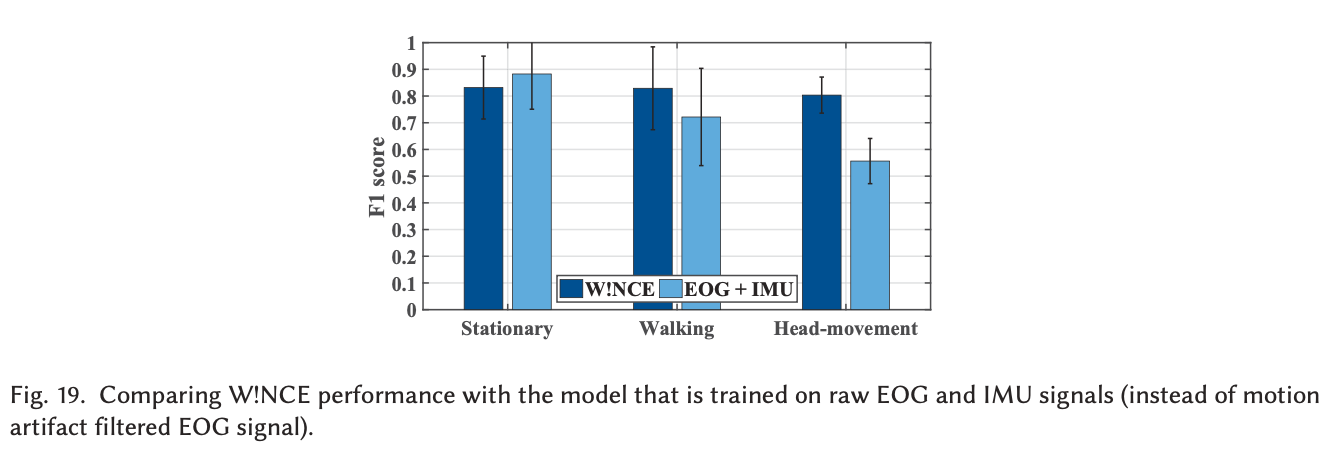

- Motion artifact removal pipeline: A neural-network-based stage that removes motion noise across multiple EOG channels and many different head movement patterns.

Points

- The Facial Action Coding System (FACS) is already a standard, and camera-based methods can apply it to recognize facial expressions, but cameras are awkward to position and raise privacy problems.

- The hardware is not a lab prototype; it is based on J!NS MEME, a commercially available eyewear device that is comfortable for daily use.

- EOG metrics are useful for recognizing different types of activities, such as reading and writing, since each activity has its own unique eye movement pattern.

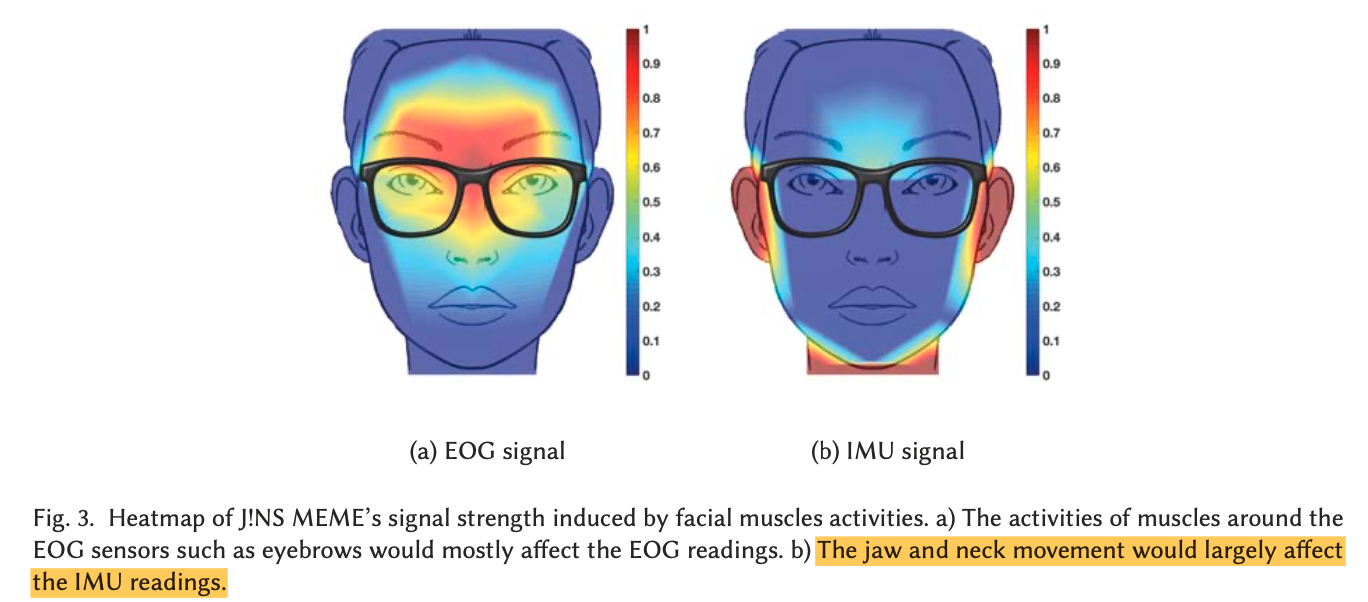

- The EOG sensors are placed on the nose, and the IMU sensor is embedded in the temples of the eyeglasses.

- W!NCE takes body motion into consideration, which existing work on motion artifact removal from physiological signals could not do.

- Lower-face actions are harder to detect because their signals are generated by distant muscles and are damped by the time they reach the sensors.

- J!NS MEME uses stainless steel electrodes, a kind of stiff-material dry electrode. Compared to gel-based electrodes, they have a lower price, good electrical performance, and a lower possibility of skin irritation.

- Some head movements and facial actions affect the EOG sensors in similar ways (for example, nodding and lowering the eyebrows), but their IMU signals are quite different, which helps confirm the real action.

- Signal variation across individuals has to be considered: face shape, the shape of the nose bridge, how the glasses fit behind the ears, and how each person uses the upper facial muscles all strongly influence the captured signals.

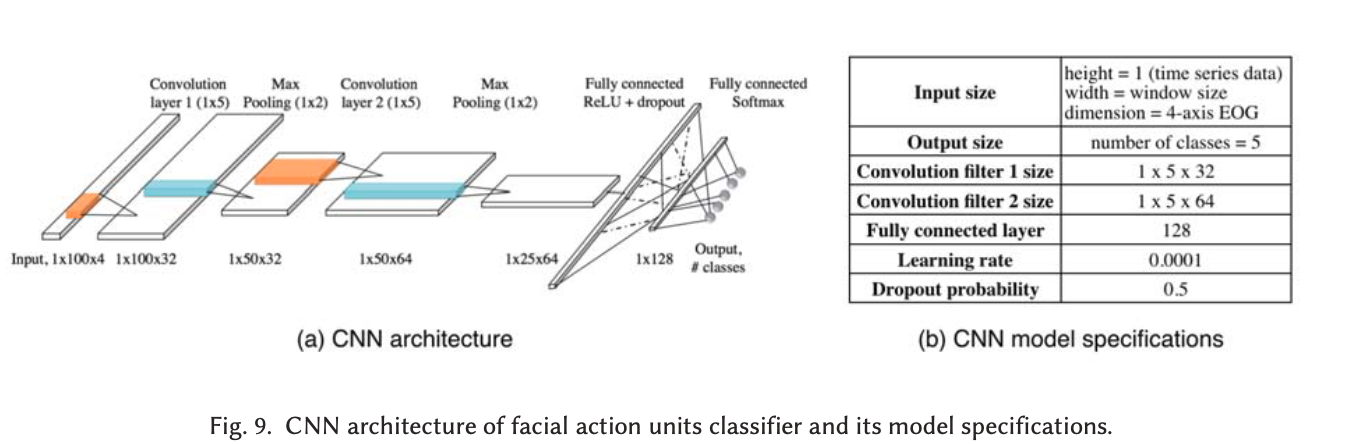

- Personalization with transfer learning addresses the problem above. The device collects some labeled data from a user the first time they use it, and re-trains only the last (fully connected) layer.

- The CNN model does not run all the time. It is triggered only when substantial EOG activity is detected after the motion artifact removal stage. Likewise, the motion artifact removal model runs only when significant variation is observed in the raw EOG signal.
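
To make the personalization point concrete, here is a minimal sketch of re-training only the last layer, in plain Python. It is an illustration under stated assumptions, not the paper's model: `frozen_features` is a hypothetical stand-in for the pretrained convolutional layers, `generic_weights` are made-up placeholder values for a last layer trained on other users, and the two classes and the sample windows are toy data.

```python
import math

def frozen_features(window):
    """Hypothetical stand-in for the pretrained layers. It is never updated."""
    peak_to_peak = max(window) - min(window)
    mean_abs = sum(abs(x) for x in window) / len(window)
    return [peak_to_peak, mean_abs, 1.0]  # trailing 1.0 acts as a bias input

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(weights, window):
    """Class probabilities from the final layer applied to the frozen features."""
    feats = frozen_features(window)
    return softmax([sum(w * f for w, f in zip(row, feats)) for row in weights])

def personalize(weights, samples, lr=0.1, epochs=200):
    """Re-train only the final layer on one user's labeled windows (plain SGD)."""
    weights = [row[:] for row in weights]  # leave the generic model untouched
    for _ in range(epochs):
        for window, label in samples:
            feats = frozen_features(window)
            probs = predict(weights, window)
            for k, row in enumerate(weights):
                grad = probs[k] - (1.0 if k == label else 0.0)  # cross-entropy gradient
                for j in range(len(row)):
                    row[j] -= lr * grad * feats[j]
    return weights

# Placeholder "generic" last layer (assumed values, one row per class).
generic_weights = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0]]

# Toy labeled windows from a new user: 0 = neutral, 1 = upper-face action.
user_samples = [
    ([0.0, 0.1, 0.0, -0.1], 0),
    ([0.05, -0.05, 0.1, 0.0], 0),
    ([0.0, 1.0, 0.2, -0.8], 1),
    ([0.1, 0.9, -0.7, 0.0], 1),
]

personal_weights = personalize(generic_weights, user_samples)
```

Only `personal_weights` changes per user; the feature extractor is shared, which is why a small amount of labeled data at first use can be enough.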
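
The two-level triggering described in the notes above can be sketched as a simple cascade. This is a rough illustration, not the paper's implementation: the thresholds, the use of standard deviation as the measure of "variation", and both model functions (`remove_motion_artifacts`, `classify_action_unit`) are hypothetical placeholders.

```python
from statistics import pstdev

# Hypothetical thresholds; the notes do not give concrete values.
RAW_VARIATION_THRESHOLD = 0.05
CLEAN_ACTIVITY_THRESHOLD = 0.05

def remove_motion_artifacts(eog, imu):
    """Placeholder for the neural motion artifact removal model.
    Here it just subtracts an IMU-derived trace from the EOG window."""
    return [e - m for e, m in zip(eog, imu)]

def classify_action_unit(clean_eog):
    """Placeholder for the CNN classifier; returns a dummy label."""
    return "action_unit"

def process_window(eog, imu, stats):
    """Run each stage only if the cheaper check before it fires."""
    # Gate 1: skip everything when the raw EOG signal is quiet.
    if pstdev(eog) < RAW_VARIATION_THRESHOLD:
        return None
    stats["artifact_removal_runs"] += 1
    clean = remove_motion_artifacts(eog, imu)
    # Gate 2: skip the CNN when the variation was only motion noise.
    if pstdev(clean) < CLEAN_ACTIVITY_THRESHOLD:
        return None
    stats["cnn_runs"] += 1
    return classify_action_unit(clean)

stats = {"artifact_removal_runs": 0, "cnn_runs": 0}
quiet = process_window([0.0, 0.01, 0.0, -0.01], [0.0, 0.0, 0.0, 0.0], stats)
motion_only = process_window([0.0, 0.5, -0.5, 0.0], [0.0, 0.5, -0.5, 0.0], stats)
facial = process_window([0.0, 0.8, 0.6, 0.0], [0.0, 0.0, 0.0, 0.0], stats)
```

In this toy run the quiet window triggers neither model, the motion-only window triggers artifact removal but not the CNN, and only the last window reaches the classifier, so the expensive stages stay idle most of the time.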

Questions

- What if the user sweats on their nose? Will it affect the accuracy of the EOG sensors?

- The eyeglass-based design will be easy to adopt for people who already wear glasses all the time, but it may be difficult for those who do not have the habit.

- Given the triggers for the CNN and the motion artifact removal model, will emotions or movements that generate only minor signals, such as lower-face actions, be detected?

- How do we know whether a prediction is right? A user may express multiple emotions and movements at the same time. The same question applies to how the dataset was collected.

- Why would people need, or want to buy, this product? Does an ordinary person need to know their facial actions and emotions all day? If so, what can be derived from the collected data?

Images